The marketing world has been abuzz about ChatGPT for a hot minute. And content marketers feel threatened. But is ChatGPT going to replace us? Here’s my take: Don’t worry – yet. Read on to learn why.

What is ChatGPT?

ChatGPT is a chatbot that uses artificial intelligence (AI) to answer questions conversationally. The bot is trained to learn what humans mean when they ask questions. Its human-like responses are eerie. But even more shocking is how fast ChatGPT answers. Ask for a sourdough recipe, who had the most walks at-bat in 1940 or whether crocuses are native to West Virginia, and you’ll find out instantly. Need a poem for English class or JavaScript code for a website? The bot will spit both out in seconds.

Who can use ChatGPT?

Anyone can use ChatGPT for free because it’s a work in progress. All you have to do is create an OpenAI account and then log in. I’ve tested the bot for about two weeks and haven’t had much trouble accessing it. During high-demand periods, however, you might have to refresh your browser and try again later. If you have trouble using the bot because it’s “at capacity,” you can pay $20 a month for ChatGPT Plus. The paid subscription gives you access during peak times and faster answers.

Yesterday, OpenAI announced that it’s upgraded the technology that powers the chatbot. The new system, GPT-4, is what you’ll get if you opt for the paid ChatGPT Plus subscription. One caveat: There’s a usage cap that OpenAI will adjust based on demand and performance. Currently, you can’t access GPT-4 with the free version of ChatGPT.

Why are content marketers worried?

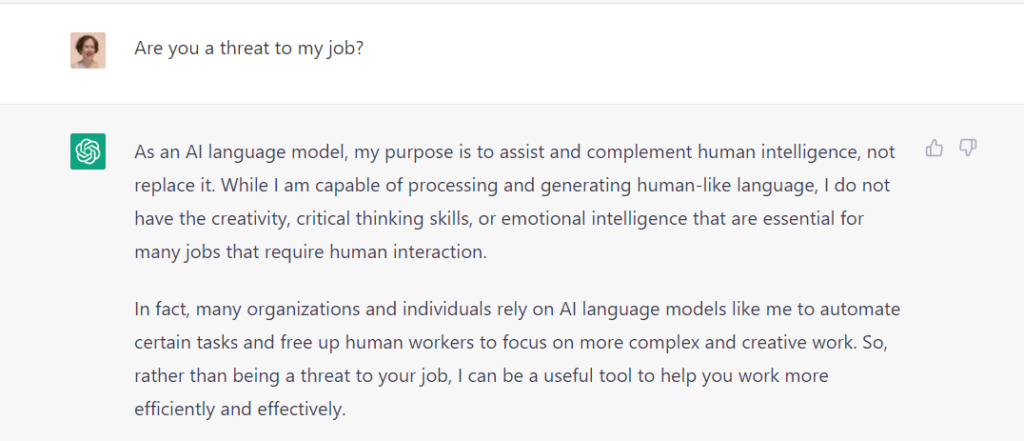

No matter how skilled you are as a content marketer, it’s impossible to write an 800-word article, a 15-page e-book or 20 social media posts on any topic in a minute or less. ChatGPT does, and that’s what makes it scary. Moreover, the writing is simple and free of buzzwords and jargon. The bot’s speed and efficiency are impressive. Does this mean AI will replace content strategists and creators? I was curious to see what the bot “thinks” about that. The answer was wordy. And keep in mind, I didn’t specify my job.

Phew! I feel so much better. Despite ChatGPT’s long-winded response, no one knows if the future could look like a version of “The Terminator” or “2001: A Space Odyssey.” Some workforce experts believe white-collar jobs are most in jeopardy because they’re ideal for automation. I started writing about AI in marketing five years ago, when the technology was ramping up. It’s not like a wave of layoffs occurred because the bots took over.

But today, the technology is more sophisticated, and companies are increasingly looking for ways to cut costs. The business benefit is that AI doesn’t call in sick or take holidays. One bot can do up to 30 times the work of a human full-time employee, Gartner found. Many copywriters, in particular, are nervous about AI making them obsolete.

In short, the jury’s out for now. My take is that humans still have the edge. Next, I’ll explain why.

ChatGPT has limitations

While ChatGPT is fast, it’s not foolproof. I tested it in many ways by asking:

- Simple questions in English

- More complex questions in English

- Questions in French and Irish

- For long-form articles

- For multiple banner ads on one topic

- For a brochure

Let’s explore the issues I found:

1. The bot isn’t so smart (for now).

Beyond basic questions like what started the Great Chicago Fire of 1871 or who painted “Mona Lisa,” the bot’s answers were sometimes wrong. While the answers seemed plausible, I knew better. For example, I asked the bot to write a 500-word article about why the NOVUX™ ecosystem is breakthrough technology and how it will change network connectivity in the future.

What I got back “sounded” great if you didn’t know anything about the topic. However, I’d done a deep dive into NOVUX with subject experts to write a brochure and a microsite last year, so I knew ChatGPT was incorrect. It seems to think NOVUX is a type of digital money. Nope! NOVUX is telecommunications technology.

ChatGPT wrote:

“The NOVUX blockchain is a distributed ledger that stores all transactions and interactions between users within the NOVUX ecosystem. The blockchain ensures transparency, security, and immutability of data. … The NOVUX token is the native cryptocurrency of the NOVUX ecosystem. It serves as a means of exchange, enabling users to transact and interact with the ecosystem.”

It produced other information about NOVUX that wasn’t correct. Others have also pointed out false answers to different questions since ChatGPT’s launch. The bottom line: Bots tend to present misinformation as fact.

OpenAI, the company that created ChatGPT, admits the problem in its “Limitations” section. Certainly, a critical and unanswered question about ChatGPT is what the source of truth for the bot’s answers will be. Furthermore, who will determine the source (or sources) of truth and how will they do so? It’s clear that ChatGPT is still learning. In time, it might become “smarter.”

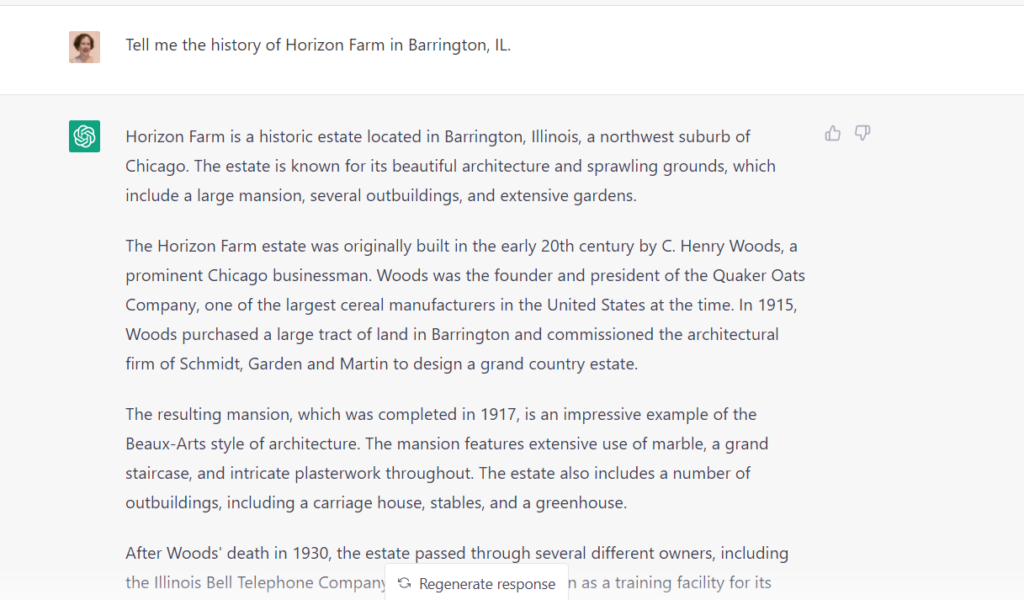

I also asked the bot to share the history of Horizon Farm in Barrington, Illinois. Having grown up on the farm (which wasn’t Horizon Farm then), I know much of the information it provided is bogus.

2. The writing is overly simplistic.

When I graduated from college, reading comprehension was said to be at an eighth-grade level. Between the time I left journalism and became a marketer, it was at a sixth-grade level. Now, the rule of thumb is to write for fourth graders. ChatGPT’s content for the articles and brochure I asked for seemed even more basic. I’m giving away my age, but it’s as if my 9-year-old self opened up an Encyclopedia Britannica hardback from the school library and copied the text word for word.

I understand that accessibility is important. I also get that content creators must appeal to broad audiences. But certain types of content and readers need more sophisticated writing than a bot can produce. I shudder to think what ChatGPT would do with a 2,000-word thought leadership article on complex biomedical engineering challenges.

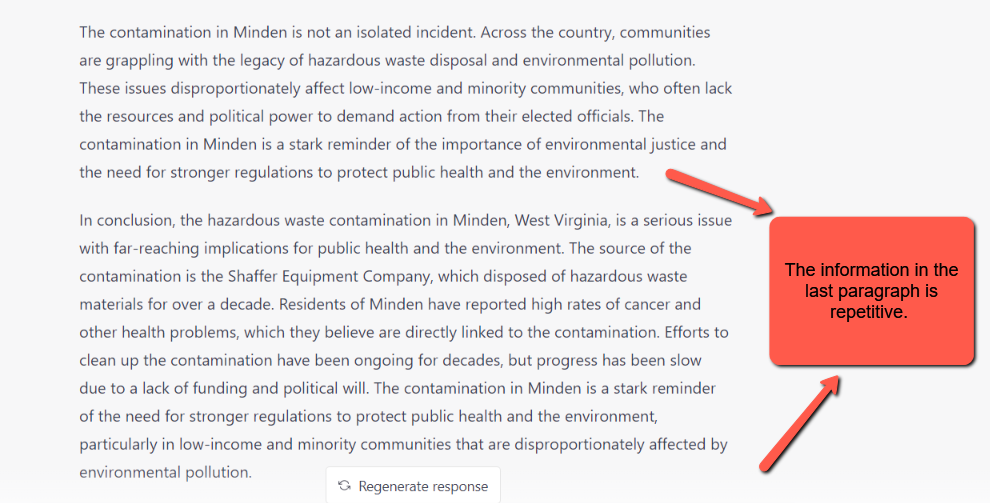

3. The content is formulaic.

After I tested ChatGPT’s ability to write articles, brochures and banner ads, patterns emerged. The bot used a templated approach. Take an article, for example. It wrote a headline, a basic introduction and then listed bulleted or numbered points. Next, it provided a summary. Then, for the article’s conclusion, it basically repeated the content it had already shared.

Similarly, for the banner ads, every headline suggestion included two parts separated by a colon. The headlines were too long and weren’t much different from one another. I “ordered” it to write headlines without the colons, but the bot ignored me and used them anyway. I was curious to see what would happen if I restricted the word count, so I told it to write the headlines for the same topic using 10 words or fewer. Success! ChatGPT provided a few suggestions.

4. ChatGPT doesn’t cite sources.

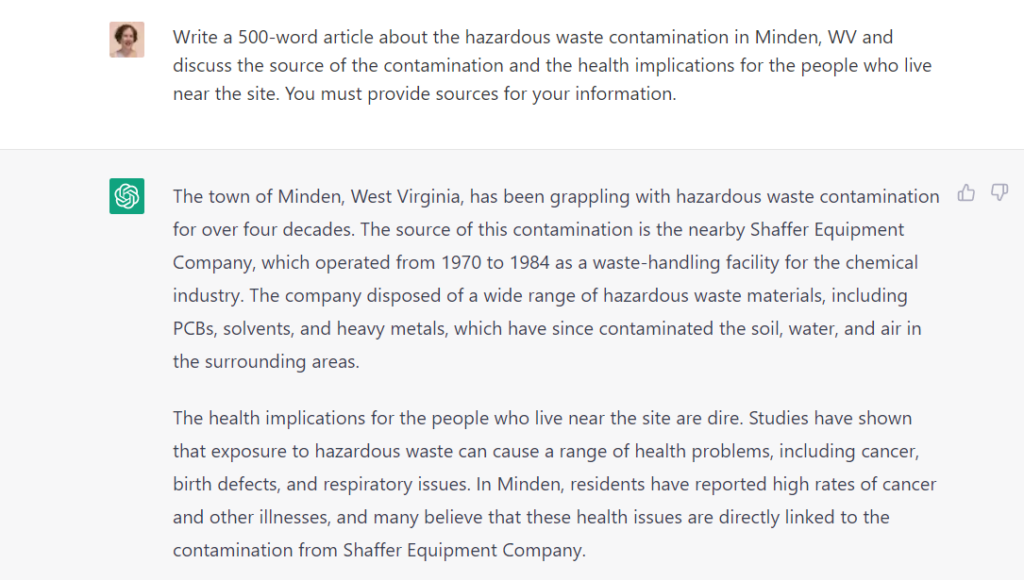

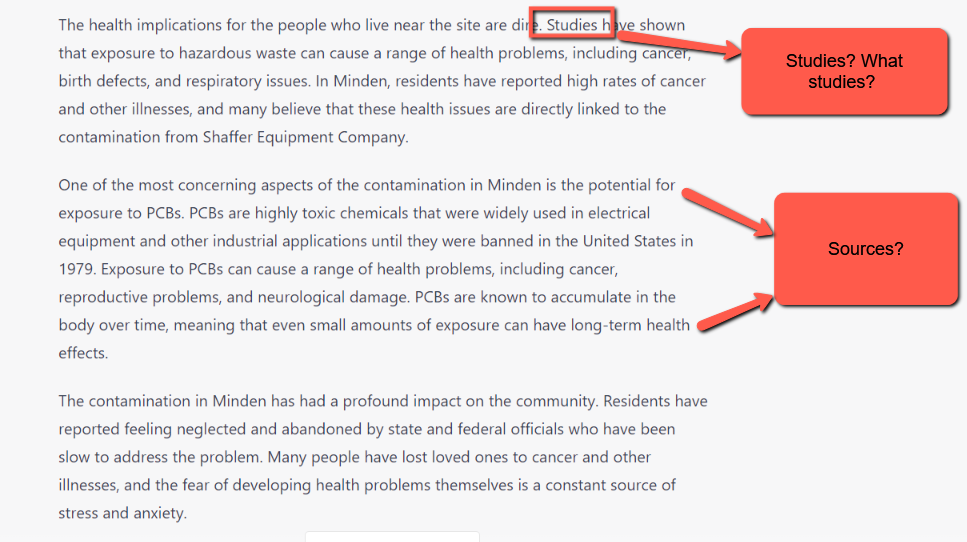

No matter the topic I asked ChatGPT to write about, the bot didn’t provide sources. It wasn’t clear where it pulled information from and whether it was accurate. For one of my tests, I asked the bot to write a 500-word article about a toxic waste site that I covered as a journalist. I wanted to see what ChatGPT would do with details about the health implications for those who lived nearby. As is often the case with hazardous waste sites, the answers aren’t clear.

The bot produced an article, but it was vague and lacked sources. Next, I asked for an article on the same topic and “told” it to include citations and data. I thought for sure that would work. It didn’t. In my third and final attempt, I wrote, “you must provide sources for your information.” Guess what? ChatGPT ignored me. It also repeated content.

I’ve written about the importance of citing original sources for content. At this point, I wouldn’t be comfortable publishing anything the bot produced without verifying the information, unless it’s common knowledge or widely accepted.

A former co-worker who publishes content online that attracts as many as 10,000 visitors a day learned recently how AI content can backfire. A freelance writer sent him a short story that a bot wrote. The story read well and seemed credible. After he posted it, a subject expert contacted him to ask where the information came from. The expert told him it was incorrect. My friend regrets his mistake and won’t be doing that again.

As we used to say in journalism, “If your mother says she loves you, check it out.” And if ChatGPT says your mother loves you, don’t believe it!

5. ChatGPT gives conflicting answers.

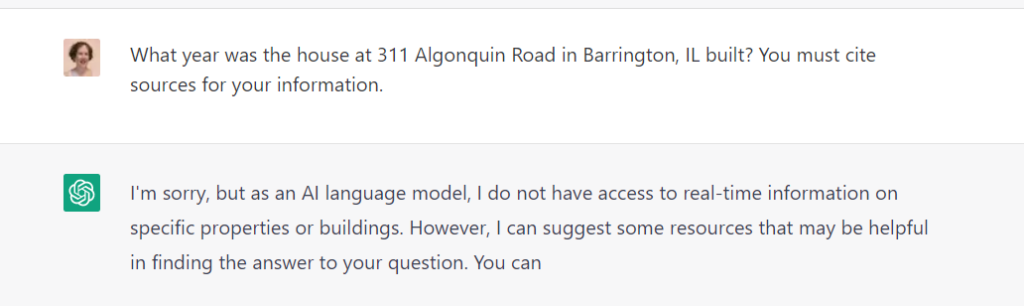

Among the simple questions I asked ChatGPT was when our house in Barrington, Illinois, was built. I provided the street address. The first time, the bot told me 1912, but it didn’t provide a source. Two or three days later, I tried again and got this response:

OpenAI addresses this weakness on its website by saying that ChatGPT is “sensitive” to tweaks in phrasing or attempts to ask a question multiple times. Initially, the bot may claim to not have an answer, but ask a second time with a change in wording, and the bot can answer correctly.

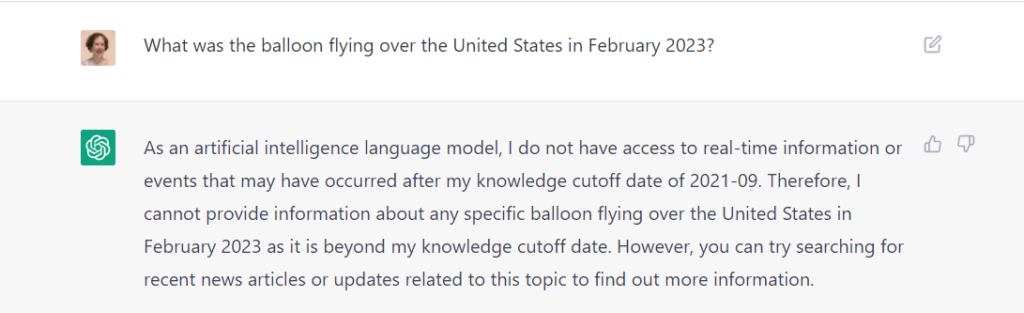

6. ChatGPT can’t share real-time information.

The data available is limited to 2021, so the bot won’t tell you who won last week’s basketball game or who just announced a run for U.S. president.

7. ChatGPT isn’t always fast.

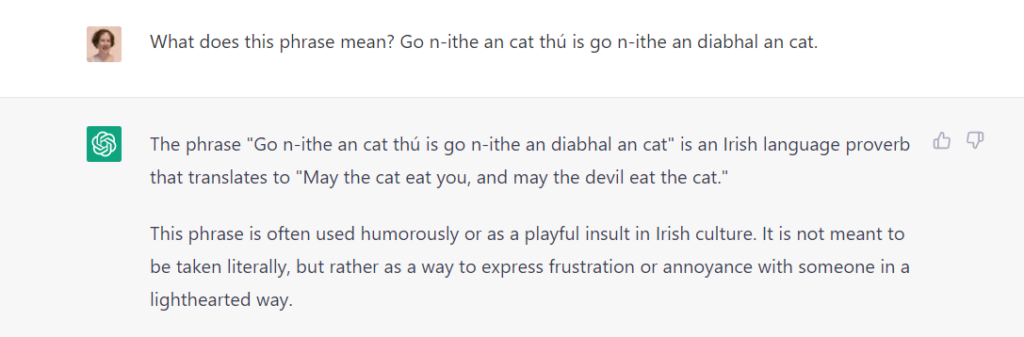

In my experiments, ChatGPT “choked” when I asked questions in languages other than English or asked it to translate an English phrase into another language. The pauses were long enough that I thought the bot might not be able to process them. I played around with French and Irish. In particular, it stumbled on an Irish proverb. However, I tried it again later, and the answer came much faster.

This is a great segue to the next issue with ChatGPT.

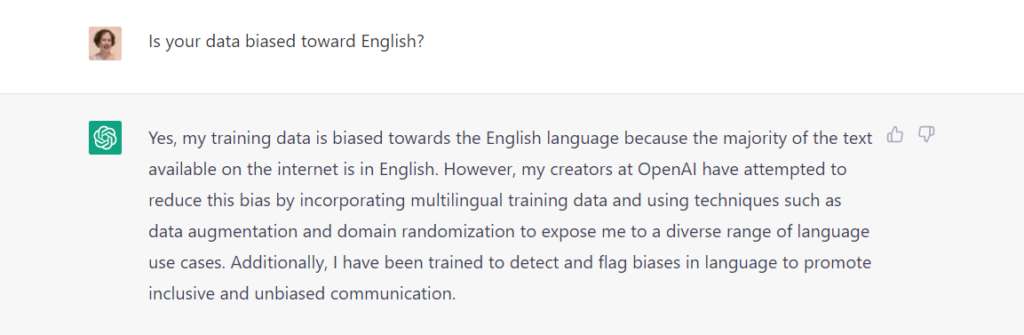

8. The bot comes with biased baggage.

Today, diversity, equity and inclusion are at the forefront, particularly to support underserved and marginalized communities. Unfortunately, ChatGPT has a bias toward English. While English is the most spoken language in the world, according to Statista, the bot could reinforce inequality and discrimination by giving preference to those whose first language is English.

What about GPT-4?

I’m gleeful that GPT-4 has some of the same flaws as ChatGPT. Score 2 for the humans! OpenAI admits that while the upgraded technology exhibits “human-level performance,” GPT-4 isn’t reliable because it “hallucinates” facts and makes reasoning errors. In short, AI doesn’t understand what’s true and what isn’t. It makes stuff up that you’d swear seems right. OpenAI said the bot doesn’t “learn from its experience.” Well, thank goodness for that (insert smile here).

If you’re still nervous about the technology, here are three more reasons to take a deep breath:

- Like ChatGPT, GPT-4 is biased.

- The upgraded bot has no knowledge of events after September 2021 (same with ChatGPT).

- GPT-4 is slower than the “older” bot.

OpenAI will, no doubt, be working on these issues. It’s difficult to predict how far and how fast developers will be able to take the technology. However, keep in mind they released GPT-4 just four months after ChatGPT.

Are the bots coming for us?

Some experts think AI-written content could reshape marketing and content creation as we know it. However, we have at least one advantage. Namely, bots don’t have human cognitive abilities. People are still necessary for critical thinking, creativity, imagination and inspiration. Relish that thought as OpenAI and other bot developers build out and refine the technology.